Last summer, I received an email from Academia.edu with a link to an AI-generated podcast it had created based on an article I wrote about law faculty scholarly visibility. I listened, and I wasn’t impressed. The AI narrator mischaracterized my arguments and even fabricated a plug for Academia.edu’s platform as though I had said it. I wrote up my evaluation in a previous WisBlawg post, comparing it to a podcast I generated myself using Google’s NotebookLM. The quality difference was significant.

That post caught the attention of a reporter at the Chronicle of Higher Education. The resulting article, “An AI Bot Is Making Podcasts With Scholars’ Research. Many of Them Aren’t Impressed,” describes the concerns that scholars, myself included, have raised about Academia.edu’s AI-generated content.

From Podcasts to Comics

A few months ago, I received another email from Academia.edu — this time with an AI-generated comic strip based on a different article of mine about the origins of anti-sex trafficking law in Wisconsin’s lumber camps. That sent me down a new path, comparing their comic to one I created myself using Google Gemini’s Nano Banana image generator.

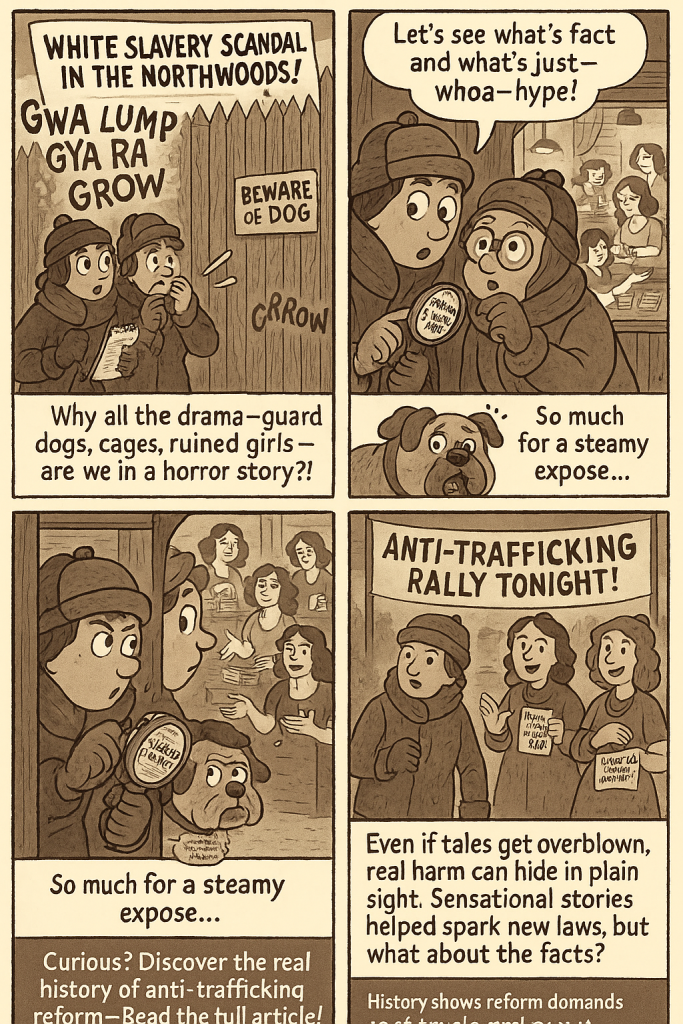

The Academia.edu comic has misspellings, duplicated text, and cut-off captions, along with telltale AI image errors and, as the Chronicle reporter pointed out, “bold type apparently meant to suggest the sounds of an assault taking place.” Yikes! The content is a surface-level treatment of my article at best. And I had no way to revise it.

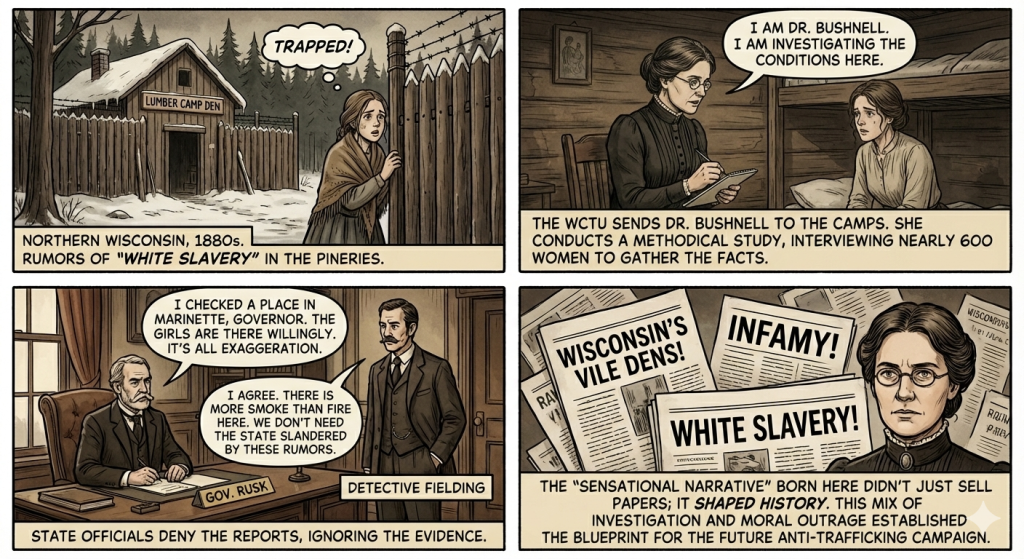

The Google Gemini Nano Banana comic is a different story. The art is clean, the text is accurate, and it represents the substance of my article pretty well for a four-panel comic. I was able to edit the content to better reflect my work. The only error I couldn’t correct was that the speech bubbles in one panel point to the wrong characters, a minor issue compared to the problems in the Academia.edu version.

Credit Where It’s Due

I give Academia.edu credit for being willing to try something new. Using AI to make academic research more accessible and visible to broader audiences is a promising idea, and the tools are getting better. Google’s NotebookLM and Nano Banana both produced quality results when I tested them with my own scholarship. The potential is there. The execution just needs to catch up.

Advice for Academia.edu

If Academia.edu intends to continue exploring AI to promote authors’ scholarship, I’d suggest focusing on three things:

First, quality. Does the AI-generated content accurately represent the author’s scholarship? If it distorts the research, it does more harm than good to scholarly visibility — and to the author’s reputation.

Second, transparency. AI-generated media about scholarly work needs to be clearly and prominently labeled as such. Listeners, viewers, and readers should know immediately that what they’re consuming was generated by AI, not by the author.

Third, and this is a big one, author control. Authors should be able to decide whether AI-generated content is created from their work and should be able to revise it before it goes public. Academia.edu does allow authors to approve whether the content displays on their profile, which is a good start. They’re also working on allowing authors to revise the generated content, but that feature isn’t available yet. And note that only authors with a paid subscription to Academia.edu have the option for AI-generated promotion.

AI-generated media has potential for improving how scholarly work reaches new audiences. Getting there means getting the quality right, labeling it clearly, and letting authors steer it.

Disclosure: This post was developed by the author with organizational and drafting assistance from Claude AI. All content was reviewed and refined for accuracy.